Cooperative perception offers several benefits for enhancing the capabilities of autonomous

vehicles and improving road safety. Using roadside sensors in addition to onboard sensors

increases reliability and extends the sensor range. They offer a higher situational awareness

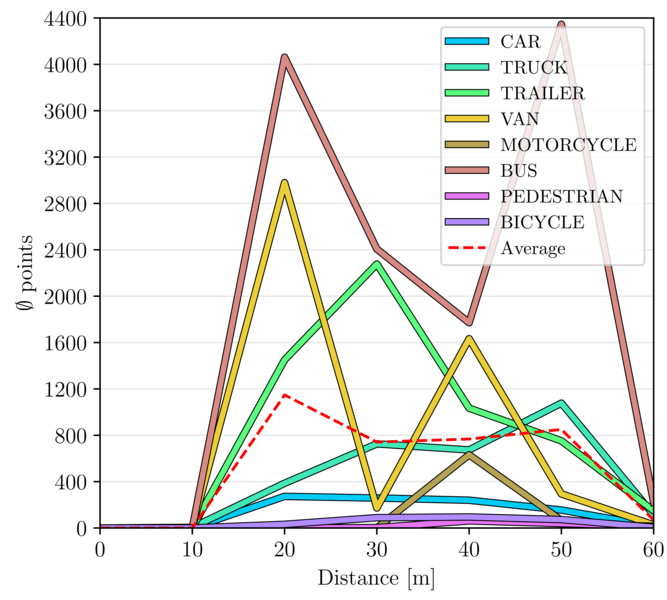

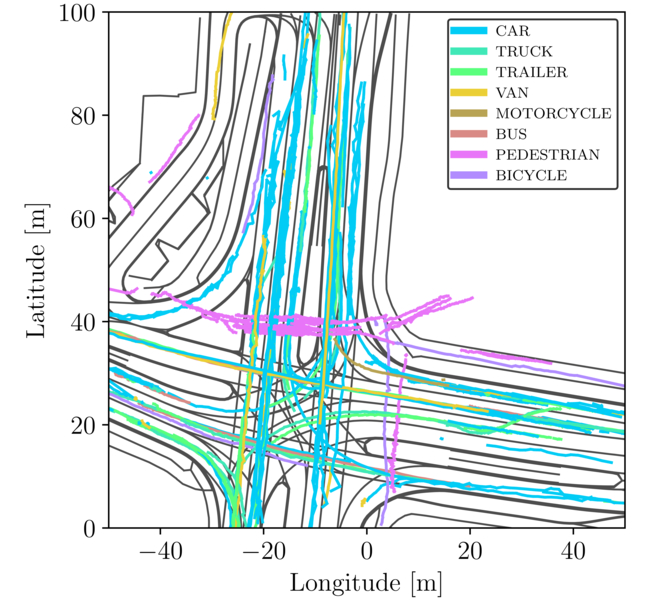

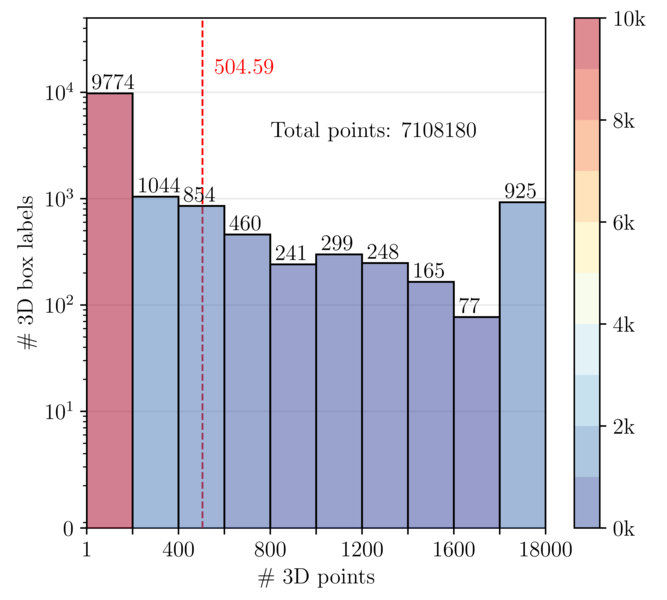

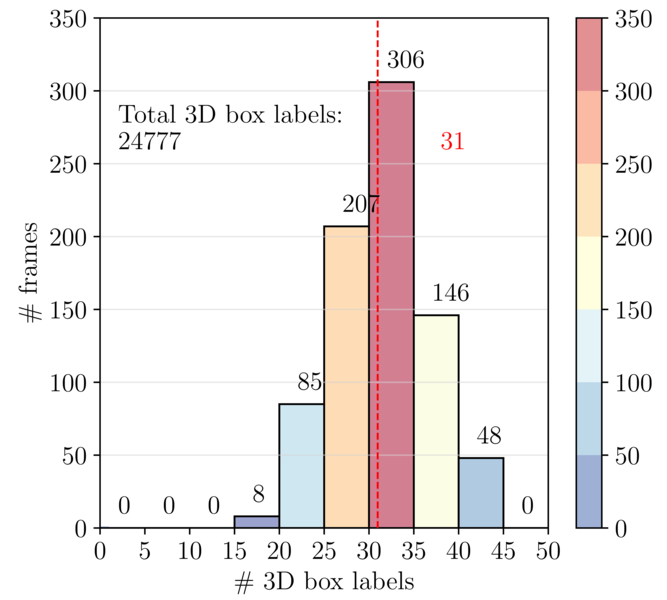

for automated vehicles and prevent occlusions. We propose CoopDet3D, a cooperative multi-modal

fusion model, and TraffiX, a V2X dataset, for the cooperative 3D object detection and tracking

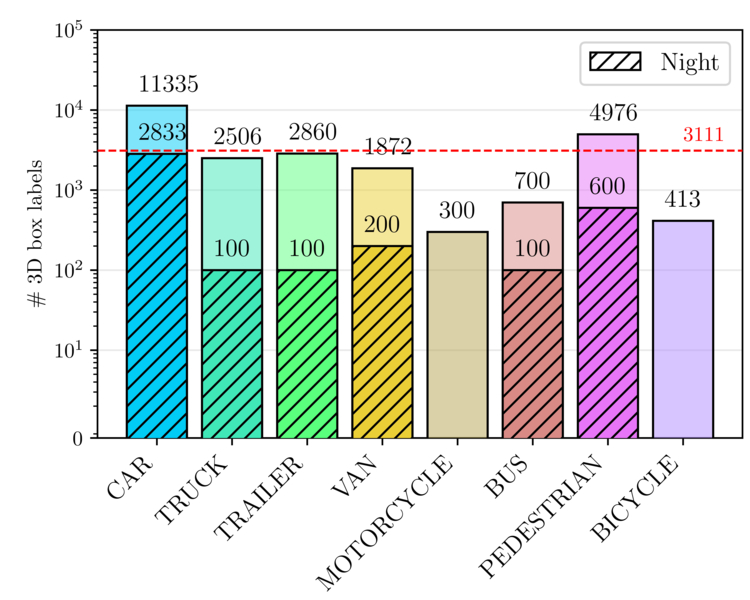

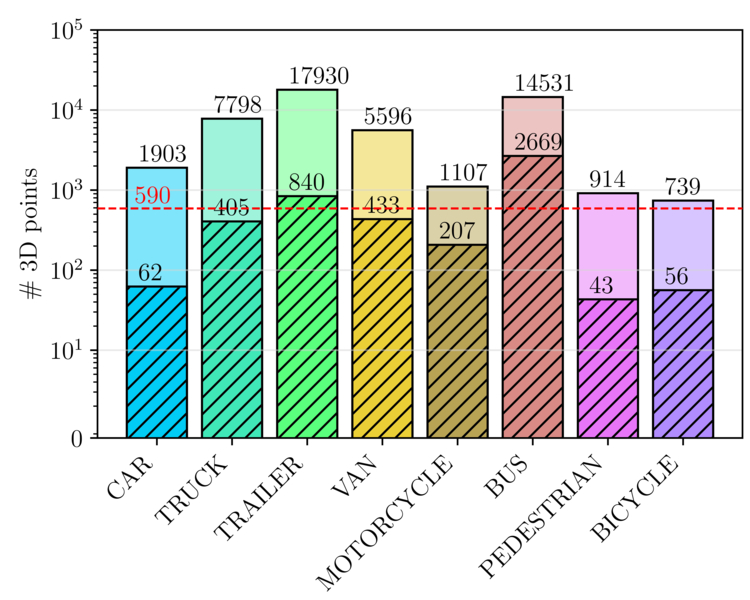

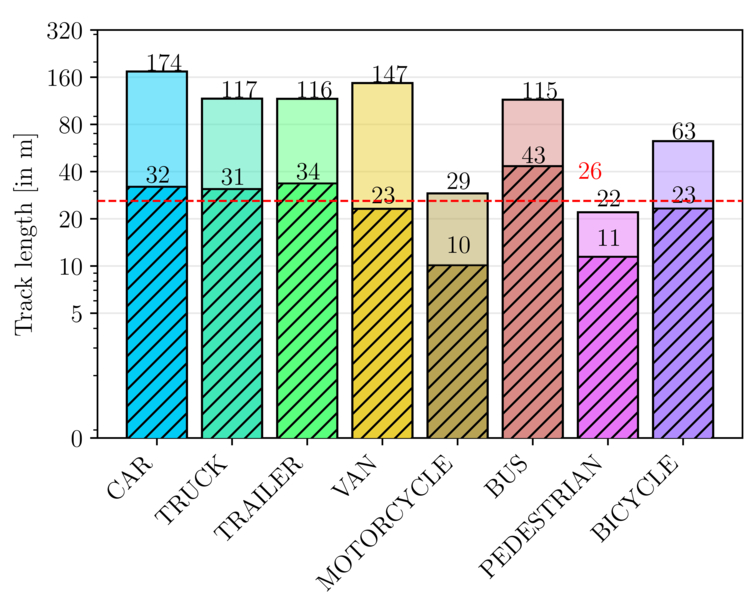

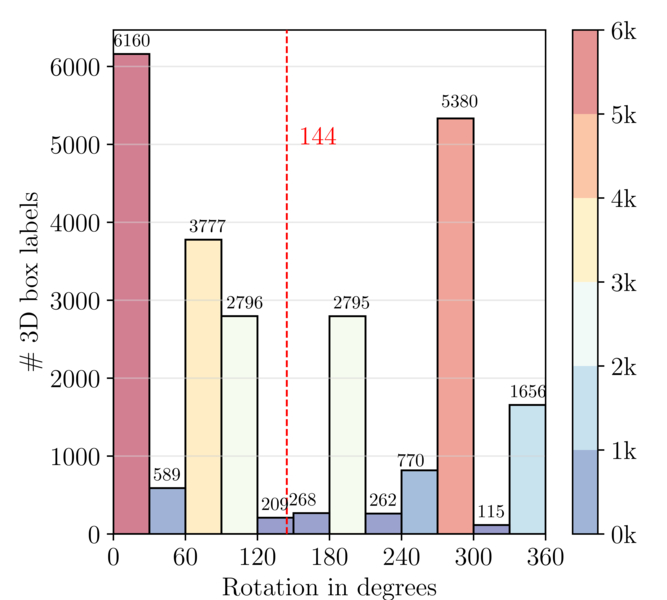

task. Our dataset contains 1,600 labeled point clouds and 4,000 labeled images from five

roadside and four onboard sensors. It includes 50k 3D boxes with track IDs and precise GPS and

IMU data. We labeled eight categories and covered occlusion scenarios with challenging driving

maneuvers, like traffic violations, near-miss events, overtaking, and U-turns. Through multiple

experiments, we show that our CoopDet3D camera-LiDAR fusion model achieves an increase of +14.36

3D mAP compared to a vehicle camera-LiDAR fusion model. Finally, we make our model, dataset,

labeling tool, and development kit publicly available to advance in connected and automated

driving.